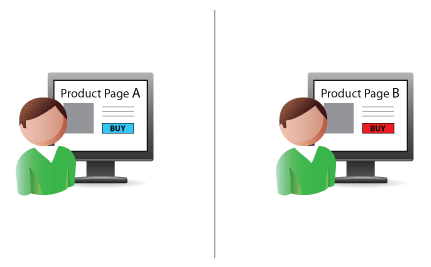

A/B testing, where customers get different versions of the same live site to interact with, is an increasingly popular tool for website owners. However, while the company may get useful feedback and data from this testing, is it really the best way forward?

While the results might look black and white, A/B testing still leaves a lot of unknowns. The test design can be biased by the person setting it up, and some customers may not get the service they were expecting. It also raises the question of how many testing iterations you should go through, and what impact they will have on the goodwill of your customers.

Pros of A/B testing

Get clear evidence

It’s easy to see how many users complete a transaction with site A compared with site B. The evidence is based on real behaviour, so it is hard data of the type that money men love (and can be presented in a simple-looking, hard-hitting chart).
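As a rough illustration of what that evidence looks like in practice, the sketch below compares the conversion rates of two versions and checks whether the gap is bigger than chance alone would explain. It uses a standard two-proportion z-test, which is one common choice rather than the only one, and the function name and visitor numbers are made up for the example.

```python
import math


def compare_conversions(conv_a, visitors_a, conv_b, visitors_b):
    """Two-proportion z-test on the conversion rates of versions A and B.

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b

    # Pooled rate: the conversion rate if A and B really performed the same.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical numbers: 1,000 visitors per version, 100 vs 150 sales.
rate_a, rate_b, z, p_value = compare_conversions(100, 1000, 150, 1000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be noise, but it says nothing about why one version did better.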

Test new ideas

If you have an innovative idea for an existing site, A/B testing provides hard proof as to whether it works or not. However, you will need to implement that big idea in hard code before you can test it this way.

Optimise one step at a time

If you run a large site, or many sites, then A/B testing is a fantastic opportunity to “patch” test, by starting out in a small corner and then working up to the main pages of the site. However, can owners of smaller sites with less traffic afford to gamble with real users by giving half of them a site experience that might not be optimal?
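One way to limit that gamble is to show the new version to a smaller share of visitors. The sketch below is a minimal example of splitting traffic by hashing a user ID, so the same visitor always sees the same version. The function name, the experiment label and the 10% share are all assumptions for illustration, not the behaviour of any particular testing service.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically place a user in version 'A' or 'B'.

    share_b is the fraction of users (0.0 to 1.0) who should see version B.
    """
    # Hash the experiment name with the user ID so that different
    # experiments split the same users in different ways.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 32 bits of the hash into a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < share_b else "A"


# Hypothetical experiment: only about 10% of visitors see the new checkout page.
print(assign_variant("user-42", "new-checkout", share_b=0.1))
```

The trade-off is that a smaller share for B means the test takes longer to gather enough data.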

Answer specific design questions

Are green buttons better than red ones for your site design? This and many other questions can be answered by A/B testing, as it allows the designer to test different colours, button placements, page layouts and images, all of which are good areas in which to slowly improve a website.

Cons of A/B testing

Can take lots of time and resources

A/B testing can take a lot longer to set up than other forms of testing. Setting up the A/B system can be a resource and time hog, although third-party services can help. Depending on the company size, there may be endless meetings about which variables to include in the tests. Once a set of variables has been agreed, designers and coders will effectively need to produce double the amount of work. In addition, getting conclusive results can take weeks or months for low-traffic sites.
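To see why low-traffic sites wait so long, here is a back-of-the-envelope estimate of how many visitors a test needs and how many days that takes. It uses the usual normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as conventional defaults. The conversion rates and traffic figure are invented for the example.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH version to detect the change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_needed(n_per_variant, daily_visitors):
    """Days to fill both versions, assuming a 50/50 split of all traffic."""
    return math.ceil(2 * n_per_variant / daily_visitors)


# Hypothetical site: 5% of visitors buy today, and we hope the new page gets 6%.
n = visitors_per_variant(0.05, 0.06)
print(n, "visitors per version")
print(days_needed(n, daily_visitors=200), "days at 200 visitors a day")
```

With these made-up figures the test needs roughly eight thousand visitors per version, which is well over two months of traffic at 200 visitors a day. Smaller expected improvements push the number up sharply.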

Only works for specific goals

This kind of testing is ideal if you want to solve one dilemma, such as which product page gives the best results. But if your goals are less easy to measure, pure A/B testing won’t provide those answers.

Doesn’t improve a dud

If your site had usability problems to begin with and the variations are just iterations of it, they are likely to share the fundamental flaws of the original. A/B testing won’t reveal these types of flaw or reveal user frustration, and you won’t be able to pick up on the reasons behind the site’s problems. If A resulted in more sales, that only tells you it did better than B, not that it is good. Identifying and removing the original usability issue could be much quicker and produce much better results.

Could end up with constant testing

Once the test is over, that is it. The data is useless for anything else. Further A/B tests will have to start from a new baseline, and other types of testing are likely to be applied only to the more successful version, even though they could have found equally useful information from the rejected one.

The best use of A/B testing

When used with other testing methods, A/B testing is a valuable tool for refining a working design and finding out what attracts your users or helps them complete the processes on your pages. However, it cannot measure ease of use, frustration or other such elements, so it cannot be relied on as the total solution. Instead, use some form of usability testing to better understand users’ frustrations and issues, then use A/B testing to test the different solutions.

What are your experiences of A/B testing? Were they as useful as you had hoped?