In 2006, the face of video gaming changed when Nintendo introduced its Wii console. Its motion-sensing controller let the machine register the player’s input as they moved it around. Suddenly, players could jump, wave, bat, swordfight and perform many other actions through motion-sensing technology. More importantly, the Wii helped the public get used to the idea of a computer sensing their actions.

Now Sony has unveiled a higher-fidelity equivalent called Move, while Microsoft has unveiled its Kinect gadget for the Xbox 360. Kinect is of particular interest because it pairs a camera with an infrared sensor to monitor the user’s movements. Without any kind of controller, users can interact with games through gestures and motion.

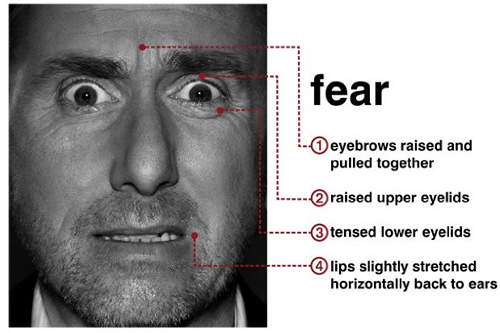

Beyond games and novelties, this technology, developed by the Israeli company PrimeSense, will soon be flooding into television sets, computers and public kiosks. At its simplest, it lets end users interact with systems through hand and arm movements. But with a little effort and further gains in fidelity, developers could use the cameras and clever software to track where the user is looking, or train the system to watch the user’s face for emotional cues.

This information can be fed back to the designers of these systems (be they interactive menus, websites, kiosks or ATMs) to help them build better systems and interfaces and improve the user experience. For example, if users are observed to ignore one part of a website, designers will learn this through the feedback and can make that area more prominent through visual design. Combining the two ideas, if sensors detect confusion in people reading part of a site or document, whatever they are looking at can be flagged and checked for clarity. This has some fascinating implications for the future of user-centred design.

In the not-too-distant future, banking systems could check the honesty of customers withdrawing money to detect card fraud (think of having Tim Roth’s character from Lie to Me in every ATM). At a more everyday level, interface designers could have a field day building systems with all sorts of feedback loops, as David Leggett’s UX Booth article demonstrates.

So, without getting all 1984 on us, what advances in this technology do you expect could assist user experience development and interface and site design?