What is it?

Loop11 is an online, remote usability testing tool. In simple terms, Loop11 allows you to create an online survey that includes tasks for users to complete on a website of your choice. You can mix questions with tasks in any order you wish, and for each task you can set a goal for the user to achieve, e.g. find a size 10, blue women’s jumper for under £30. You then set the URL that they will start on at the beginning of the task. There is a demo on the Loop11 website which takes you through what your users will see when they complete a test.

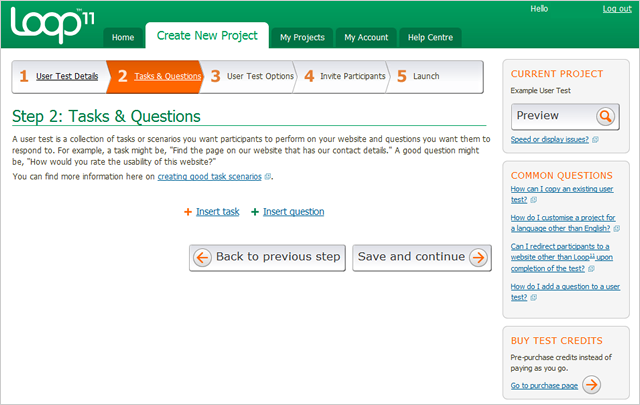

A step-by-step process of setting up your test

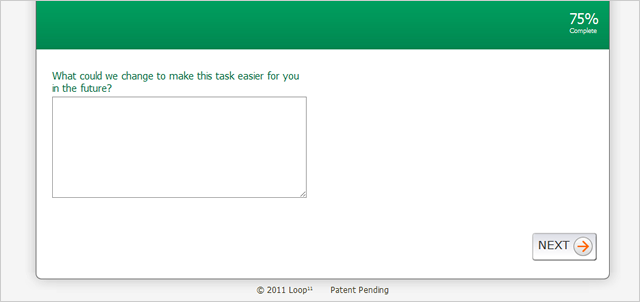

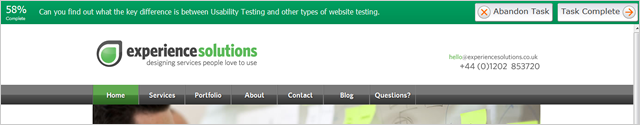

Below are two examples showing how the test looks to the user when they are completing a task (Figure 1) or answering a question (Figure 2). On each task the objective is always displayed at the top, along with the progression buttons. When asking a question, Loop11 gives you various question types which allow users to answer in different ways. The example I have shown is a normal text field answer/comment box.

Figure 1. An example of how a task would look to a user

Figure 2. You can insert questions before or after the tasks. Above is an example of a text field question

What are its advantages?

This is the closest tool we have found so far to an actual usability test. Loop11 is one of the only sites we have found that really lets you create something similar to the usability tests we regularly carry out. You have control over the design of a proper test script which is the main reason we have found it to be so useful.

You don’t have to be there with the user (in the same room). Loop11 tests are un-moderated so all you have to do is design it, then set it live and spread the word by sending a link to your selected participants. Users can then complete the tasks in their own home at their own pace. Its main advantage over face to face usability tests is that it allows you to test as many users as you want.

Loop11 isn’t free, but it is cheaper than moderated usability testing. You also don’t have to spend much money on incentives as users are participating when they want, and where they want (we’ve written more about this on UXbooth). We still included a small incentive as a thank you for users spending their time completing the test, and as we made sure the test itself wasn’t long it worked out rather well for everyone.

You can run Loop11 tests on any device that browses the internet. We haven’t tested this ourselves, but as Loop11 runs in the browser and is fully online, we would assume it holds in most cases and that it shouldn’t matter too much what device you use.

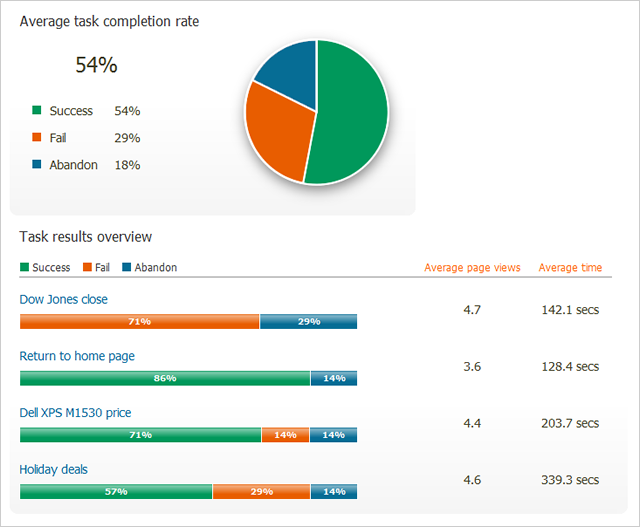

View ready-made reports on participants completing your test. After you have set the test live and participants start completing it, you can view reports which show how many people succeeded in completing tasks, how long it took them, and how many pages they went through to get there. This information comes in four export options (Excel XML, PDF, CSV, and Excel XML with participants) so that you have access to the original data to do with as you please. The PDF option exports the report version, which includes graphically presented results for the overall test and for each task. However, we found that the Excel document of raw data was the most useful, as it allowed us to work with the data to produce a report with the information we required. We could then brand it and use it within our projects.

A sample version of the PDF report that Loop11 exports after a test is finished

What are its limitations?

Once a test is published there is no going back. You can’t edit the test after publishing it; you can only delete it altogether, so you’d better get it right the first time! We would recommend thinking carefully about the order of your tasks and questions before adding them to the test list. Re-test and double check as many times as possible in preview mode before putting it live.

Judging the success or failure rate can be tricky. The site tests live websites, so for each task you have to set the URL you want users to start from. To track success and failure, you also need to add the URLs of the pages which you consider to count as a success when users reach them. This can be problematic if your task can be completed in a variety of different ways. If you don’t anticipate them all, you could record a failure even if the user succeeds.
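To make the problem concrete, here is a minimal Python sketch of URL-based success tracking. It is only an illustration of the idea, not Loop11's actual matching logic: the function names, the rule of comparing the final page against a list of success URLs, and the `shop.example.com` addresses are all our own assumptions.

```python
from urllib.parse import urlsplit


def normalise(url: str) -> str:
    """Reduce a URL to host + path, ignoring scheme, query string and trailing slash."""
    parts = urlsplit(url)
    return parts.netloc.lower() + parts.path.rstrip("/")


def task_outcome(final_url: str, success_urls: list[str]) -> str:
    """Hypothetical rule: a task succeeds only if the user ends on a listed success URL."""
    targets = {normalise(u) for u in success_urls}
    return "success" if normalise(final_url) in targets else "fail"


# The only route we anticipated when setting up the task (made-up URL).
success_urls = ["https://shop.example.com/womens/jumpers/blue-crew-neck"]

# A user who follows the expected route is counted as a success.
print(task_outcome("https://shop.example.com/womens/jumpers/blue-crew-neck/", success_urls))

# A user who finds the same jumper via the sale section is recorded as a fail,
# because that page was never added as a success URL.
print(task_outcome("https://shop.example.com/sale/blue-crew-neck", success_urls))
```

The second user did complete the task, but a list of success URLs can only recognise the routes you thought of in advance.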

Tasks need to be carefully designed. The design of each task becomes critical in an un-moderated test, especially with this tool, as it needs to be much less fluid than a typical face to face usability test, where we would normally allow users the freedom to complete the task as they would naturally. With Loop11 you are forced to be more quantitative in your approach to get a more defined success/fail criterion. We therefore found that the tool forced us to design tasks to be much more basic and definite. For example, in a face to face test we might ask users to define the problem they typically face and then show us how they would solve that problem using the site. With Loop11 we would design the task to answer a very specific question we know they can find an answer to on a specific page.

Slow loading times on some websites. Sometimes the website you want to test will perform slowly, which could affect how likely people are to complete the test. We also noticed it crashed on a few sites. We recommend checking how well a site performs in preview mode before you commit to purchase. Loop11 do provide a piece of JavaScript that you can insert into the body text of the site to enhance the speed. Unfortunately we couldn’t do this on the site we were using, which is a drawback if you are testing a site without access to the backend.

You might get more people participating than you bargained for. One quite costly drawback, especially for those of us who incentivise participants, is that the participant limit only closes the test once that number of people have completed it. It cannot stop people who had already started the test while the last person within the limit (the 20th, in our case) was still completing it. You may therefore end up paying slightly more people than you originally wanted (we set a limit of 20 but had to pay 24 participants).
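If you want to budget for this, one rough approach is to assume the same overshoot we saw (24 paid against a limit of 20) and scale it to your own limit. The Python sketch below does that arithmetic. The £5 incentive is a made-up figure for illustration, and the overshoot on your own test may well be different from ours.

```python
import math
from fractions import Fraction


def expected_payouts(limit: int, observed_limit: int = 20, observed_paid: int = 24) -> int:
    """Scale a participant limit by the overshoot seen in our test (24 paid for a limit of 20)."""
    ratio = Fraction(observed_paid, observed_limit)
    return math.ceil(limit * ratio)


def incentive_budget_pence(limit: int, incentive_pence: int) -> int:
    """Total incentive cost in pence, allowing for the assumed overshoot."""
    return expected_payouts(limit) * incentive_pence


# Hypothetical incentive of £5 (500 pence) per participant.
print(expected_payouts(20))             # 24 participants to pay
print(incentive_budget_pence(20, 500))  # 12000 pence, i.e. £120 rather than £100
print(expected_payouts(50))             # 60 participants for a limit of 50
```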

You don’t know who really completed the test properly. This is probably the most obvious limitation of un-moderated testing. You would like to think that anyone who sat down to do the test would want to do it properly, but you will get those who rush through the tasks and questions just to gain the incentive at the end. You can usually look at the results and spot the people who didn’t complete it properly: they took the shortest time to complete their tasks and didn’t write any comments. The question you then have to ask yourself is whether to discount them from the testing.
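If you are working from the exported raw data, a first pass at spotting these participants can be scripted. The Python sketch below flags anyone who finished in less than half the median time and left no comment. The column names (`participant`, `total_seconds`, `comment`), the sample rows and the 50% cut-off are all our own assumptions for illustration, not the layout of Loop11's actual export.

```python
import csv
import io
from statistics import median

# Made-up sample data standing in for an exported CSV file.
SAMPLE = """participant,total_seconds,comment
p01,412,Could not find the size filter
p02,388,
p03,61,
p04,455,Checkout was easy
p05,97,Fine
"""


def flag_rushed(rows, fraction=0.5):
    """Return participants who were much faster than the median and wrote no comment."""
    times = [int(r["total_seconds"]) for r in rows]
    cutoff = median(times) * fraction
    return [
        r["participant"]
        for r in rows
        if int(r["total_seconds"]) < cutoff and not r["comment"].strip()
    ]


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
print(flag_rushed(rows))  # ['p03']
```

Here p05 was also quick but did leave a comment, so only p03 is flagged. A script like this only produces a shortlist; whether to discount those participants is still a judgement call.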

When we recommend using it

If you’re looking for a quick, high level view of how a website is doing then this would be a good solution for you. It also helps if you are having problems testing users who are geographically spread or too time poor to meet one to one. With Loop11, users can take part from anywhere that has internet access, at any time they want. Due to its nature, Loop11 is a useful tool for benchmarking a site: you can find out where users are running into an issue and then carry out some face to face usability testing to find out exactly where the problem lies. The scenario where we are most likely to use it is monitoring the usability of sites we have already worked on. However, as the tool has strict success/fail metrics, it is only really suited to carefully designed tasks which have a clear answer.

See anything I’ve missed? Just let me know!